Adansonia: A Disk Usage Analyzer in Rust

Baobab is a disk usage analyzer from Gnome which I enjoy using. The right panel has nifty visualizations, but their usefulness is limited in practice. More eye candy than anything. On launch, Baobab has to crawl and index the entire directory to calculate the sizes of each file entry, but the indexing felt unnecessarily slow—I suspected it could've be done faster. Hence, I wrote my own TUI app to replicate its functionality in Rust.

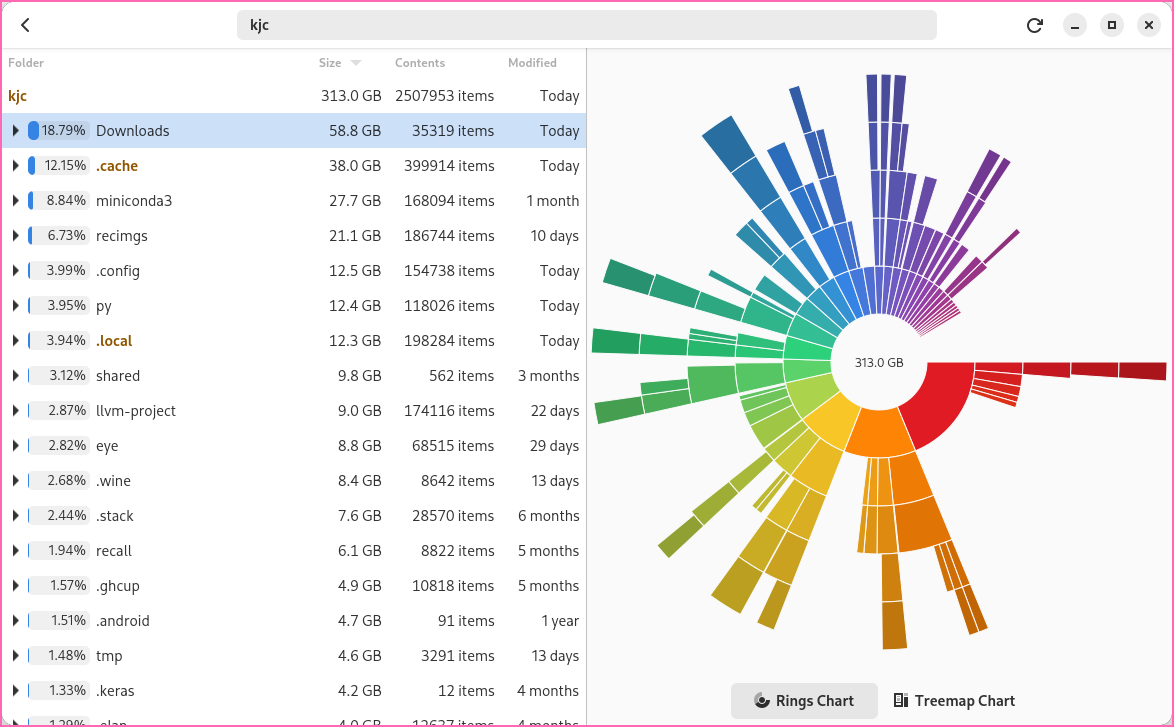

I named the TUI app Adansonia, the Latin name for a genus of trees commonly referred to as baobabs. This website now supports Asciinema casts, so here's one of my TUI app in action:

Baobab probably uses a tree-like data structure internally to represent the directory tree, because that seems like the most natural type to use here. Instead, I am employing a much dumber method that works surprisingly well—my app stores the directory tree as a dynamic array of paths with metadata. The paths are sorted later in lexicographic order, meaning we can binary search. More on this later.

#[derive(Clone)]

struct Info {

path: PathBuf,

depth: usize,

size: u64,

is_dir: bool,

}

struct Tree {

data: Vec<Info>,

}To index a directory, the scan function recursively crawls the directory and populates a Tree. I ended up implementing my own code for this instead of using a library. It was very educational. The function takes a path called root and returns a Tree.

fn scan(root: &Path) -> Tree {

...

}I was inspired by the parallel approach in the CLI tool fd. Modern SSDs can handle many concurrent I/O operations so significant speedups can be gained by multithreading. To start, we instantiate a group of workers and their corresponding stealers. We push the root path into one of the workers. We also need to remember the metadata and the device the root directory is on for later.

let root_metadata = root.metadata().unwrap();

let root_dev = root_metadata.dev();

let num_threads = 16;

let workers: Vec<_> = (0..num_threads)

.map(|_| Worker::<PathBuf>::new_lifo())

.collect();

let stealers: Vec<_> = workers.iter().map(|w| w.stealer()).collect();

workers[0].push(PathBuf::from(root));The action happens when we spawn a bunch of threads. Each takes their respective worker, clones a copy of the others' stealers, and proceed to loop forever: in each iteration, the thread tries to take a path from its own worker stack; if the stack is empty, it tries to steal from one of the other threads—and if all the stacks are empty, indicating there is nothing left to do, the thread breaks from its loop and exits.

After a thread takes a path we list its entries. The path the thread takes is always a directory. Symbolic links and entries from a different device are skipped. The entry is then appended into the result vector, and if the entry is a directory we push it onto our local worker stack. That's it. Once all worker stacks are exhausted, every thread's steal attempt fails, and we have completed.

let handles: Vec<_> = workers

.into_iter()

.enumerate()

.map(|(i, worker)| {

let mut stealers = stealers.clone();

stealers.remove(i); // remove our own stealer

stealers.rotate_right(i); // so no one stealer is swamped

thread::spawn(move || {

let mut result: Vec<Info> = vec![];

loop {

let path = worker

.pop() // try to take from local stack

.or_else(|| {

for s in &stealers {

// loop until steal is not Steal::Retry

while let Some(_) = match s.steal() {

Steal::Success(path) => return Some(path),

Steal::Empty => None,

Steal::Retry => Some(()),

} {}

}

None // if all stealers are empty, then exit thread.

});

if path.is_none() {

break;

}

let path = path.unwrap();

// sometimes fs::read_dir fails with permission error or

// whatever, in which case we just ignore the error.

let _ = fs::read_dir(path).map(|it| {

for entry in it {

let entry = entry.unwrap();

// skip symlinks and files in different devices.

let metadata = entry.metadata().unwrap();

if metadata.is_symlink() || root_dev != metadata.dev() {

continue;

}

result.push(Info {

path: entry.path().to_path_buf(),

depth: entry.path().components().count(),

size: metadata.size(),

is_dir: metadata.is_dir(),

});

if metadata.is_dir() {

worker.push(entry.path().to_path_buf());

}

}

});

}

return result;

})

})

.collect();There are multiple deficiencies with this code—it doesn't account for hard links, if the first thread pops the root path then there is temporarily no work to do and other threads might exit, etc. But it seems to work fine for now.

Anyway, the last bit of code in the scan function joins the threads and returns the combined results. The fast parallel sort function from the rayon library sorts the final result vector in lexicographic order—this is important, because it allows us to binary search the vector.

let mut result = vec![Info {

path: root.to_path_buf(),

depth: root.components().count(),

size: root_metadata.size(),

is_dir: true,

}];

for handle in handles {

result.append(&mut handle.join().unwrap());

}

result.par_sort_unstable_by(|a, b| a.path.cmp(&b.path));

return Tree { data: result };Now that we have a flat array of paths, we must "accumulate" in the bottom–up direction the sizes of each entry under the directories. Since the array is in order, a linear traversal accomplishes this quickly. Even for millions of paths, it only takes ~10ms. I'm unsure of its correctness, in that there are probably edgecases relating to exotic filenames where this procedure fails, but the results seem to match the du command line tool well enough.

To list the entries in a directory we can binary search the start and end ranges of the directory then filter for only the entries on the same depth level as the directory. Surprisingly, even for the home folder with millions of paths, this linear filtering process does not induce a noticeable latency.

impl Tree {

fn accumulate(self: &mut Self) {

let mut sums: [u64; 4096] = [0; 4096];

let mut prev_depth = 0;

for i in (0..self.data.len()).rev() {

let depth = self.data[i].depth;

if depth < prev_depth {

self.data[i].size += sums[prev_depth];

sums[prev_depth] = 0;

}

sums[depth] += self.data[i].size;

prev_depth = depth;

}

}

fn get(self: &Self, p: &Path) -> Vec<Info> {

let start = self

.data

.binary_search_by(|x| x.path.cmp(&p.to_path_buf()))

.unwrap();

let end = self

.data[start..]

.partition_point(|x| x.path.starts_with(&p.to_path_buf()));

let target = p.components().count() + 1;

let mut items: Vec<Info> = self.data[start..start + end]

.iter()

.filter(|x| x.depth == target)

.cloned()

.collect();

items.sort_by(|a, b| b.size.cmp(&a.size));

items

}

}And that's it. The rest of code deals with the TUI, and is of no interest. Check out the GitHub repo if you're interested in trying it out or seeing the full code. Flat arrays are fast, surprisingly so. Computers are fast.